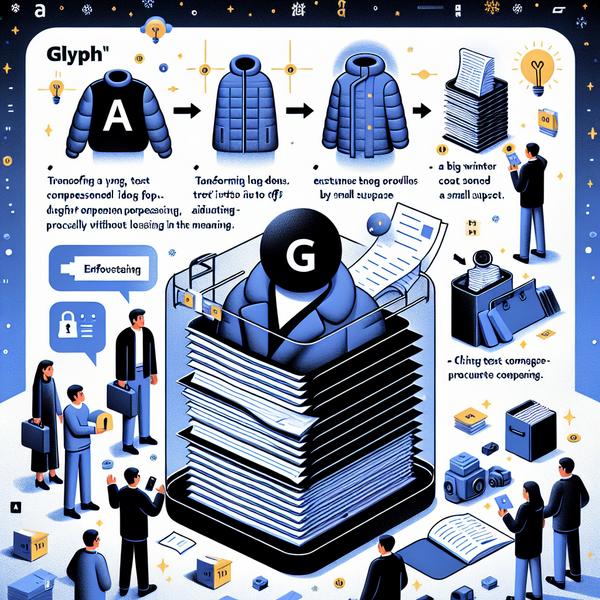

Ever wished you could shrink long, dense text into something a model can handle more easily? Zhipu AI's "Glyph" renders lengthy text as page images and hands them to a Vision-Language Model (VLM) to read. The reported result is roughly 3x to 4x fewer input tokens with clarity and context intact, which matters for training cost, memory use, and inference speed. Instead of stretching the context window token by token, Glyph flips the script: it packs more text into each visual token while preserving semantic meaning. Let's explore how Glyph works and what it could mean for the future!

Breaking Down Glyph: What Makes It Unique?

- Giant blocks of text are intimidating, and models that read them token by token hit context-window and compute limits fast. Glyph takes a different route: it renders the text into images that preserve both layout and meaning.

- Picture a massive document turned into neatly formatted pages, much like a scanned PDF, that are then "read" by a Vision-Language Model (VLM). The model takes in each page the way you would take in a printed sheet, rather than consuming one raw text token at a time.

- The payoff is compression, which cuts the heavy computational load. Each visual token stands in for several text tokens, so the input sequence gets shorter: roughly 3x to 4x shorter on long text inputs, with accuracy reported as comparable to text-only baselines.

- An analogy might be helpful here: think of trying to pack a bulky winter coat into your carry-on bag without compromising its fluffiness. Glyph is the vacuum-sealing tool that maintains functionality while fitting everything snugly.

- Pushed to more aggressive compression, the same idea could stretch a model's existing context window toward 1M-token-level workloads, which are expensive to reach by lengthening the window alone. That is a real efficiency gain for AI systems.
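
To make the compression figures concrete, here is a minimal arithmetic sketch. The function names, the 128K-token window, and the document sizes are illustrative assumptions, not values taken from Glyph's code.

```python
def compression_ratio(text_tokens: int, visual_tokens: int) -> float:
    """Text tokens represented per visual token."""
    if visual_tokens <= 0:
        raise ValueError("visual_tokens must be positive")
    return text_tokens / visual_tokens


def effective_text_capacity(context_window: int, ratio: float) -> int:
    """Text tokens that fit when each visual token stands in for `ratio` text tokens."""
    return int(context_window * ratio)


if __name__ == "__main__":
    # Hypothetical document: 240,000 text tokens rendered into 72,000 visual tokens.
    ratio = compression_ratio(240_000, 72_000)
    print(f"compression ratio: {ratio:.2f}x")

    # Assumed 128K-token window at the 3x and 4x ratios quoted above.
    for r in (3.0, 4.0):
        capacity = effective_text_capacity(128_000, r)
        print(f"128K window at {r:.0f}x -> {capacity:,} text tokens")
```

Under these assumptions, a 128K window covers about 384K to 512K text tokens at 3x to 4x. Reaching 1M text tokens with the same window would take a ratio near 8x, which is why the 1M-token scenario depends on more aggressive compression.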

How Does Glyph Train Machines to "See" Text?

- Glyph training happens in three stages, starting with continual pretraining. Imagine a sponge soaking up every possible flavor: in this step the VLM absorbs a wide variety of text types rendered as images in many visually distinct layouts.

- Next comes an LLM-driven rendering search, which sounds complicated but is like playing with font styles in a Word document. It tweaks page size, font types, and spacing to find the combination that best balances clarity against compression.

- Finally, post-training combines supervised fine-tuning with reinforcement learning via Group Relative Policy Optimization (GRPO). These sound fancy, but think of them as coaching stages that sharpen how accurately the model reads the "pictures of text."

- For instance, small changes such as adjusting page resolution or margins can noticeably affect how well a VLM reads the rendered pages.

- By aligning visual tokens with textual meaning, Glyph equips models to "see" text rather than just "read" it, which helps them adapt to different tasks and styles of data presentation.
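
The rendering search can be pictured with a toy version. The sketch below is not Glyph's method: it swaps the LLM-driven search for a plain grid search, and it replaces real accuracy evaluation with a simple legibility floor. `RenderConfig`, `PATCH_PX`, `CHARS_PER_TOKEN`, and `MIN_GLYPH_PX` are all hypothetical names and values chosen for illustration.

```python
import itertools
import math
from dataclasses import dataclass

PATCH_PX = 28          # assumed: pixels per side covered by one visual token
CHARS_PER_TOKEN = 4.0  # rough heuristic for English text
MIN_GLYPH_PX = 9.0     # assumed legibility floor, a stand-in for an accuracy check


@dataclass(frozen=True)
class RenderConfig:
    dpi: int
    font_pt: float
    line_spacing: float
    page_in: tuple = (8.27, 11.69)  # A4 page size in inches
    margin_in: float = 0.5


def glyph_px(cfg: RenderConfig) -> float:
    """Font height in pixels (one point is 1/72 inch)."""
    return cfg.font_pt * cfg.dpi / 72.0


def visual_tokens_per_page(cfg: RenderConfig) -> int:
    """Number of patches needed to tile one rendered page."""
    w_px = cfg.page_in[0] * cfg.dpi
    h_px = cfg.page_in[1] * cfg.dpi
    return math.ceil(w_px / PATCH_PX) * math.ceil(h_px / PATCH_PX)


def text_tokens_per_page(cfg: RenderConfig) -> float:
    """Estimated text tokens on one page, assuming glyphs are half an em wide."""
    usable_w_pt = (cfg.page_in[0] - 2 * cfg.margin_in) * 72
    usable_h_pt = (cfg.page_in[1] - 2 * cfg.margin_in) * 72
    chars_per_line = math.floor(usable_w_pt / (0.5 * cfg.font_pt))
    lines = math.floor(usable_h_pt / (cfg.font_pt * cfg.line_spacing))
    return chars_per_line * lines / CHARS_PER_TOKEN


def compression(cfg: RenderConfig) -> float:
    return text_tokens_per_page(cfg) / visual_tokens_per_page(cfg)


def search(dpis, font_pts, spacings) -> RenderConfig:
    """Return the legible configuration with the highest estimated compression."""
    candidates = (
        RenderConfig(d, f, s)
        for d, f, s in itertools.product(dpis, font_pts, spacings)
    )
    legible = [c for c in candidates if glyph_px(c) >= MIN_GLYPH_PX]
    if not legible:
        raise ValueError("no configuration satisfies the legibility floor")
    return max(legible, key=compression)


if __name__ == "__main__":
    best = search(dpis=[72, 96, 120], font_pts=[7, 9, 11], spacings=[1.0, 1.2])
    print(best, f"estimated compression: {compression(best):.2f}x")
```

The design point this illustrates is the trade-off itself: smaller fonts and lower DPI pack more text into each visual token, while the legibility floor stops the search from choosing pages the model could not read. A real search would score candidates by measured task accuracy rather than by a fixed pixel threshold.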

Performance That Speaks Volumes: Glyph’s Results

- How does Glyph's visual-text compression hold up? Reported tests suggest it holds up well. On benchmarks like LongBench, Glyph achieves an effective compression ratio of about 3.3x, and on some tasks the ratio reaches around 5x.

- Imagine a high-speed train skimming over its tracks: Glyph achieves almost 5x faster prefilling, and decoding is about 4.4x quicker than with a conventional text-only model.

- A handy knob: raising the rendering DPI at inference time improves accuracy, at the cost of more visual tokens per page. For example, rendering at up to 120 DPI made the model more reliable on complex tasks.

- This efficiency has practical consequences: shorter inputs could cut memory and energy requirements by up to half while handling bigger workloads. That is an attractive prospect for industries that rely on large-scale AI, like healthcare or legal archives!

- Thanks to Glyph, massive academic reports, blog post series, or even multi-layered chat histories can easily fit into models and be processed faster than ever.
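
To see why a shorter sequence speeds up prefilling, here is a rough FLOP-count sketch using a standard approximation for a decoder-only transformer. The model dimensions are placeholder assumptions, the vision encoder's cost is ignored, and a FLOP estimate is not a latency measurement, so treat the output as a plausibility check rather than a prediction of the reported speedups.

```python
def prefill_flops(seq_len: float, d_model: int, n_layers: int) -> float:
    """Approximate forward-pass FLOPs for processing `seq_len` tokens at once."""
    # Attention scores (QK^T) and the weighted sum over V: quadratic in length.
    attention = 4 * seq_len**2 * d_model
    # Q, K, V, and output projections plus a 4x-wide MLP: linear in length.
    projections = 24 * seq_len * d_model**2
    return n_layers * (attention + projections)


def prefill_speedup(text_tokens: int, ratio: float,
                    d_model: int = 4096, n_layers: int = 32) -> float:
    """Estimated FLOP reduction when the input shrinks by `ratio`."""
    visual_tokens = text_tokens / ratio
    before = prefill_flops(text_tokens, d_model, n_layers)
    after = prefill_flops(visual_tokens, d_model, n_layers)
    return before / after


if __name__ == "__main__":
    # Assumed 128K-token input and the ~3.3x ratio quoted above.
    estimate = prefill_speedup(128_000, 3.3)
    print(f"estimated prefill FLOP reduction: {estimate:.1f}x")
```

Because one term scales linearly and the other quadratically with sequence length, the estimate always falls between the compression ratio and its square. The longer the input, the more the quadratic attention term dominates and the closer the estimate gets to the upper end.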

Real-World Applications of Glyph: Who Gains the Most?

- If you've ever digitized receipts or searched through scanned PDFs, you'll see the practical value of Glyph's multimodal document literacy. A model trained to read images of text as well-organized blocks of meaning can work directly with the documents industries already have.

- Take law firms archiving legal documents. Instead of feeding an AI long raw files full of complex annotations, they could render those documents as compact page images that keep the context intact for analysis.

- Or think about education! Students working with heavily annotated study materials could lean on AI systems that take in an entire context-heavy textbook at once, rather than juggling overlapping excerpts.

- The downside? Very small fonts and aggressive text layouts can trip up Glyph's accuracy. Harder-to-read type arrangements occasionally cause the model to misread content.

- To counter this, the team behind Glyph relies on clean, controlled server-side rendering. With consistent rendering, the model sees structured inputs as precise as a recipe card, rather than pages whose typographic quirks could leave gaps in comprehension.

Why Glyph Is the Future of AI Context Scaling

- The appeal of Glyph lies in how it reframes a familiar computational problem. Instead of making the context window longer, it renders raw text as images that carry more content per token without losing rich semantics. That is an unusual take on long-context compression.

- Beyond improving AI workflows such as automation and big-data analysis, the approach also fits eco-friendly AI goals, since it cuts memory and processing demands.

- In corporate settings, Glyph-style compression could shape how future systems handle warehouses holding millions of data points, for example as a backend optimization in SaaS products.

- Moreover, the idea is a foundation for multimodal agents: systems built on VLMs that bring vision, language, and inference together in one model.

- As GPT-like assistants keep growing in size and context length, Glyph gives researchers a roadmap for reducing compute costs, improving usability, and simplifying deployment without sacrificing speed.