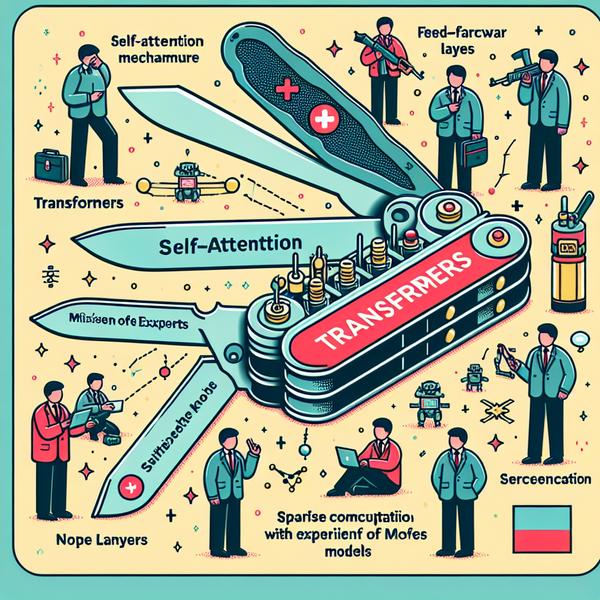

Dense Transformers and Mixture of Experts (MoE) models are two closely related architectures behind many of today's large machine learning systems. Both use self-attention and feed-forward layers, but they differ in how they use parameters, how much computation each token requires, and how they scale. A standard (dense) Transformer runs every parameter for every token, whereas an MoE model routes each token to a small subset of expert networks, which allows specialization and lowers the compute spent per token. MoE models also face their own challenges, such as expert collapse and more complex training, which makes them harder to implement. This post walks through these concepts and compares the differences, benefits, and challenges of each approach.

Understanding the Core Concept of Transformers

- Transformers have become a backbone for many AI tasks, including natural language processing (NLP) and image recognition. They are known for their scalability and reliability in handling large datasets.

- The architecture of a Transformer is based on self-attention layers, where every token in the input sequence is compared with every other token. Each token's representation can therefore draw on context from the whole sequence.

- A dense Transformer uses all of its parameters, across all layers, for every token during inference. This dense computation delivers strong performance but demands significant hardware resources for large models such as GPT-3, which has 175 billion parameters.

- Think of a Transformer as a Swiss Army knife: it is versatile and handles tasks comprehensively. However, that all-inclusive design costs more energy and resources, much like carrying a full toolbox to every small fix.
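
To make the "every token interacts with every other token" idea concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification under stated assumptions: a single head, no learned query/key/value projections (the raw token vectors play all three roles), and the function name `self_attention` and the toy inputs are illustrative rather than taken from any library.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head scaled dot-product self-attention.

    tokens: list of n vectors, each of dimension d.
    Assumption for brevity: queries, keys and values are the token
    vectors themselves (real models apply learned projections first).
    """
    d = len(tokens[0])
    outputs, weights = [], []
    for q in tokens:
        # Compare this token (as a query) with every token (as a key).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in tokens]
        w = softmax(scores)
        # The output is a weighted average of all token vectors.
        out = [sum(w[j] * tokens[j][i] for j in range(len(tokens)))
               for i in range(d)]
        weights.append(w)
        outputs.append(out)
    return outputs, weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy 2-d tokens
outputs, weights = self_attention(tokens)
```

Each row of `weights` has one entry per token and sums to 1, which is the sense in which every token attends to the whole sequence. The nested comparison also shows why the cost of attention grows with the square of the sequence length.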

How Mixture of Experts (MoE) Models Work

- The MoE architecture differs by replacing the single feed-forward network in a block with several parallel feed-forward networks, or "experts."

- A small routing network (the router, or gate) scores the experts for each input token and selects the top K, which means only a fraction of the total parameters is used for each token. This results in sparse computation.

- Imagine a library with many specialized books. If you only pick the best ones for your topic, you can learn more efficiently. MoE models work similarly by selecting only the experts needed for a task.

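- The routing mechanism reduces the compute needed per token while expanding the model's total capacity. For example, the Mixtral 8x7B MoE model has 46.7 billion parameters but uses only about 13 billion parameters per token, because each token is routed to 2 of its 8 experts. All experts still have to be held in memory, so the saving is in computation rather than in memory footprint.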

- MoE's ability to scale makes it promising for applications like conversational AI, where users expect quick responses without compromising accuracy.
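
The routing step described above can be sketched in a few lines of plain Python. This is a toy illustration, not any particular model's implementation: the helper names (`make_expert`, `run_expert`, `moe_layer`), the tiny dimensions, the ReLU experts, and the random weights are all assumptions. The gate weights are a softmax over only the selected experts' scores, which is one common convention; other models normalise differently.

```python
import math
import random

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

def make_expert(d_model, d_hidden, rng):
    """A hypothetical expert: a two-layer feed-forward network."""
    w_in = [[rng.gauss(0, 0.1) for _ in range(d_model)] for _ in range(d_hidden)]
    w_out = [[rng.gauss(0, 0.1) for _ in range(d_hidden)] for _ in range(d_model)]
    return w_in, w_out

def run_expert(expert, x):
    w_in, w_out = expert
    hidden = [max(0.0, h) for h in matvec(w_in, x)]  # ReLU activation
    return matvec(w_out, hidden)

def moe_layer(x, router, experts, k=2):
    """Route one token vector x to its top-k experts and mix their outputs."""
    logits = matvec(router, x)  # one score per expert
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])  # renormalise over chosen experts
    out = [0.0] * len(x)
    for gate, idx in zip(gates, top):
        y = run_expert(experts[idx], x)  # only k experts are ever evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, top, gates

rng = random.Random(0)
d_model, d_hidden, n_experts = 4, 8, 8
experts = [make_expert(d_model, d_hidden, rng) for _ in range(n_experts)]
router = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(n_experts)]

x = [0.5, -1.0, 0.25, 2.0]  # a single toy token
out, chosen, gates = moe_layer(x, router, experts, k=2)
```

With 8 experts and `k=2`, six of the eight expert networks are never touched for this token. That skipped work is where the per-token saving comes from, even though all eight experts still exist in memory.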

The Challenges of MoE: Overcoming Expert Collapse

- Despite its advantages, MoE comes with its own set of challenges. The most common issue is "expert collapse," where certain experts are overused while others are under-trained.

- To counter this, MoE models employ strategies like noise injection in routing, Top-K masking, limits on how many tokens each expert may accept (expert capacity), and, in many designs, an auxiliary load-balancing loss. These spread tokens more evenly and keep the router from favoring a handful of experts.

- For example, think of a group of chefs in a kitchen. If one chef is assigned all the tasks, the others don’t get to practice or learn. Balancing the load ensures all chefs stay active and improve their skills.

- These techniques keep all experts in use and the model efficient, which matters in fields like robotics and autonomous systems, where precision and adaptability are key.

- However, these mechanisms also make MoE models more complex to train and manage than dense Transformers, requiring specialized expertise and computational resources.
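
Here is a small sketch of how noise injection and a capacity limit interact with Top-K routing. It is illustrative only: `expert_capacity` and `route_with_capacity` are hypothetical helpers, the capacity formula is a commonly used form (capacity factor × tokens × K / experts), the noise is plain Gaussian with a fixed scale (published noisy-gating schemes typically learn the scale), and tokens that overflow an expert are simply dropped here.

```python
import math
import random

def expert_capacity(n_tokens, n_experts, k, capacity_factor=1.0):
    """Maximum number of tokens one expert may accept per batch."""
    return math.ceil(capacity_factor * n_tokens * k / n_experts)

def route_with_capacity(logits_per_token, k, capacity, noise_std=0.0, rng=None):
    """Assign each token to up to k experts, respecting a per-expert capacity.

    Returns (assignments, load): assignments[t] lists the experts that
    accepted token t, and load[e] counts tokens accepted by expert e.
    """
    if rng is None:
        rng = random.Random(0)
    n_experts = len(logits_per_token[0])
    load = [0] * n_experts
    assignments = []
    for logits in logits_per_token:
        # Noise injection: perturb the router scores before ranking.
        noisy = [l + (rng.gauss(0.0, noise_std) if noise_std > 0 else 0.0)
                 for l in logits]
        ranked = sorted(range(n_experts), key=lambda e: noisy[e], reverse=True)
        accepted = []
        for e in ranked[:k]:        # Top-K masking: ignore all other experts
            if load[e] < capacity:  # capacity limit: overflow is dropped
                load[e] += 1
                accepted.append(e)
        assignments.append(accepted)
    return assignments, load

n_tokens, n_experts = 16, 4
# A collapsed router: every token strongly prefers expert 0.
logits = [[2.0, 0.0, 0.0, 0.0] for _ in range(n_tokens)]

_, collapsed = route_with_capacity(logits, k=1, capacity=n_tokens)
cap = expert_capacity(n_tokens, n_experts, k=1)
_, capped = route_with_capacity(logits, k=1, capacity=cap)
_, noisy = route_with_capacity(logits, k=1, capacity=cap,
                               noise_std=2.0, rng=random.Random(0))
```

Without a limit, expert 0 receives all 16 tokens. With the capacity limit it accepts only 4 and the rest overflow, and adding noise gives the other experts a chance to be selected at all. In a real model, dropped tokens usually still flow through the residual connection rather than vanishing.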

Scaling AI: The Benefits of MoE in Large-Scale Systems

- MoE models are particularly beneficial for scaling AI capabilities. By activating only a fraction of their parameters for each token, they achieve "bigger brains" without a proportional increase in per-token compute.

- This scalability makes them attractive for cutting-edge research of the kind presented at venues like NeurIPS, where large datasets and diverse tasks push existing architectures to their limits.

- In practical scenarios, this can mean better multitasking abilities. For example, an MoE-based AI assistant integrated into devices like smart speakers could handle commands and personalized recommendations with one large model while keeping the compute per request modest.

- Compared to a dense Transformer with the same total parameter count, an MoE model spends far less computation per token, so it offers higher model capacity at a lower energy cost per token, in line with the global trend toward sustainable AI solutions.

- Whether it’s for customer service agents or advanced voice AI applications, MoE holds great promise in driving the future of intelligent systems forward.
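
The "bigger brain, same runtime" claim comes down to simple arithmetic. The sketch below uses the Mixtral 8x7B figures quoted earlier in this post (46.7 billion total, about 13 billion active) and assumes its published setup of 8 experts with 2 active per token. The split into "shared" and "per-expert" parameters is a back-of-the-envelope estimate from those two numbers, not an official breakdown, and `split_parameters` is a hypothetical helper.

```python
def split_parameters(total, active, n_experts, k):
    """Solve the two equations
         total  = shared + n_experts * per_expert
         active = shared + k * per_expert
    for (shared, per_expert)."""
    per_expert = (total - active) / (n_experts - k)
    shared = active - k * per_expert
    return shared, per_expert

total_b, active_b = 46.7, 13.0  # billions of parameters, as quoted above
shared_b, expert_b = split_parameters(total_b, active_b, n_experts=8, k=2)

# Fraction of the model that actually runs for any one token.
active_fraction = active_b / total_b

print(f"shared (attention, embeddings, ...): ~{shared_b:.1f}B")
print(f"per expert:                          ~{expert_b:.1f}B")
print(f"active per token:                    {active_fraction:.0%} of all parameters")
```

Under these assumptions, a little over a quarter of the parameters run for each token. Adding more experts would raise the total while leaving the active count per token unchanged, which is how MoE grows capacity faster than compute.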

Real-World Applications and Future Directions

- The impact of both Transformers and MoE models is not limited to theoretical research—they are actively shaping real-world technology.

- Transformers like OpenAI's GPT models have demonstrated their prowess in content creation, coding assistance, and linguistic tasks, while MoE is pushing boundaries in specialized AI tasks with its sparse computation capabilities.

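- Companies like Google and Microsoft have already published MoE-based Transformer work, such as Google's Switch Transformer and GLaM and Microsoft's DeepSpeed-MoE, which combine standard attention layers with sparse expert layers to create more adaptive systems.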

- As AI continues to grow, integrating these technologies into domains such as education, healthcare, and finance may redefine how these sectors operate.

- Imagine a future classroom where AI personalizes lessons for every student, or a healthcare system that provides faster, more accurate diagnostics—all powered by a mix of Transformer and MoE technologies.