Balancing exploration and exploitation is a central problem in reinforcement learning. This tutorial looks at three approaches to it: Q-Learning, Upper Confidence Bound (UCB), and Monte Carlo Tree Search (MCTS). Each agent navigates the same virtual grid world, a maze filled with obstacles, to reach a goal, and each uses its own strategy to decide when to try something new and when to stick with what already works. If you've ever been puzzled about how artificial intelligence makes decisions under uncertainty, you're in for an exciting journey that simplifies the complex.

Understanding the Landscape: Building the Grid World

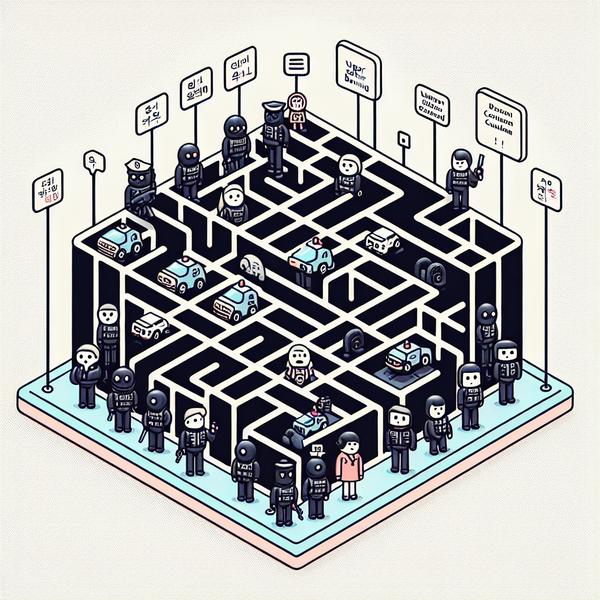

- The journey begins by constructing a grid world, which acts as the environment for our agents. Think of it as a digital maze filled with challenges.

- The grid is a square-shaped board where the agent must navigate from the starting point at the top-left corner (0,0) to the goal at the bottom-right corner (size-1, size-1).

- Random obstacles are scattered throughout the environment, making it tricky for the agent to find a clear path. These obstacles represent real-world roadblocks, like traffic or unexpected detours.

- The grid world is brought to life using Python. For instance, libraries like `numpy` create the matrix-like grid, and functions help reset or step through the agent’s position.

- Fun analogy: Imagine you're playing a treasure-hunt game. The treasure is your goal, and the randomly placed trees, rocks, and rivers are the obstacles you need to navigate!
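
To make the description above concrete, here is a minimal sketch of such an environment. It is not the tutorial's original code: the class name `GridWorld`, the action ordering, the obstacle count, and the reward values (10 for reaching the goal, -0.1 per step) are all assumptions chosen for illustration.

```python
import numpy as np


class GridWorld:
    """Square grid: start at (0, 0), goal at (size-1, size-1), random obstacles.

    Illustrative sketch only; names and reward values are assumptions.
    """

    # Action index -> (row delta, column delta): up, right, down, left
    MOVES = [(-1, 0), (0, 1), (1, 0), (0, -1)]

    def __init__(self, size=5, n_obstacles=3, seed=0):
        self.size = size
        self.start = (0, 0)
        self.goal = (size - 1, size - 1)
        rng = np.random.default_rng(seed)
        self.grid = np.zeros((size, size), dtype=int)  # 0 = free, 1 = obstacle
        # Sample obstacle cells from every cell except start and goal.
        # Note: this sketch does not check that a path to the goal still exists.
        free = [(r, c) for r in range(size) for c in range(size)
                if (r, c) not in (self.start, self.goal)]
        for i in rng.choice(len(free), size=n_obstacles, replace=False):
            self.grid[free[i]] = 1
        self.pos = self.start

    def reset(self):
        """Put the agent back at the start and return its position."""
        self.pos = self.start
        return self.pos

    def step(self, action):
        """Apply one move and return (position, reward, done)."""
        dr, dc = self.MOVES[action]
        r, c = self.pos[0] + dr, self.pos[1] + dc
        # Moves off the board or into an obstacle leave the agent in place.
        if 0 <= r < self.size and 0 <= c < self.size and self.grid[r, c] == 0:
            self.pos = (r, c)
        done = self.pos == self.goal
        reward = 10.0 if done else -0.1  # assumed reward scheme
        return self.pos, reward, done
```

A small per-step penalty is one common way to nudge agents toward shorter paths; other reward schemes would work just as well here.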

Diving Deep: The Q-Learning Agent

- Q-Learning is one of the foundational reinforcement learning algorithms. It learns through trial and error, estimating how much total reward each action is likely to yield and favoring the actions with the highest estimates.

- This agent relies on an "epsilon-greedy approach." With probability epsilon it takes a random action (exploration); otherwise it takes the action it currently believes is best (exploitation). Epsilon starts high, so early on the agent loves to explore unpredictable paths, and it is gradually lowered as the agent learns more about the environment.

- The agent builds something called a "Q-table." For each state (or position) and each action available there, the table stores an estimate of the expected future reward of taking that action.

- In Python, this behavior comes down to two steps: how the agent chooses an action and how it updates its Q-values after each move.

- Here's the fun part: imagine this as a food delivery app. Each Q-value represents how good or bad it is to take a certain street at a given time. The delivery algorithm starts guessing but learns which roads lead to faster deliveries!
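
The epsilon-greedy choice and the Q-value update described above can be sketched as follows. This is a hedged illustration, not the tutorial's original snippet: the class name `QLearningAgent` and the hyperparameter values (`alpha`, `gamma`, the epsilon decay schedule) are assumptions. The update itself is the standard Q-learning rule, Q(s, a) += alpha * (r + gamma * max Q(s', ·) - Q(s, a)).

```python
import random
from collections import defaultdict


class QLearningAgent:
    """Tabular Q-learning with epsilon-greedy exploration (illustrative sketch)."""

    def __init__(self, n_actions=4, alpha=0.1, gamma=0.95,
                 epsilon=1.0, epsilon_min=0.05, epsilon_decay=0.995, seed=0):
        self.n_actions = n_actions
        self.alpha = alpha                  # learning rate (assumed value)
        self.gamma = gamma                  # discount factor (assumed value)
        self.epsilon = epsilon              # current exploration probability
        self.epsilon_min = epsilon_min
        self.epsilon_decay = epsilon_decay
        self.rng = random.Random(seed)
        # Q-table: state -> list of action values, created lazily as zeros.
        self.q = defaultdict(lambda: [0.0] * n_actions)

    def choose_action(self, state):
        # Explore with probability epsilon, otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_actions)
        values = self.q[state]
        return values.index(max(values))

    def update(self, state, action, reward, next_state, done):
        # A terminal next state contributes no future value.
        best_next = 0.0 if done else max(self.q[next_state])
        target = reward + self.gamma * best_next
        self.q[state][action] += self.alpha * (target - self.q[state][action])

    def decay_epsilon(self):
        # Call once per episode to shift gradually from exploring to exploiting.
        self.epsilon = max(self.epsilon_min, self.epsilon * self.epsilon_decay)
```

States only need to be hashable, so grid positions such as `(row, col)` tuples can be used directly as keys.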

Confidence Is Key: Upper Confidence Bound (UCB) Strategy

- UCB agents are like meticulous planners — they not only try to find high-reward paths but also explore those they haven’t often visited. They balance curiosity (testing new things) and confidence in their existing knowledge.

- Think of it like choosing a restaurant. You can keep going to your favorite place with high ratings (reward) but might miss out on a hidden gem. UCB convinces you to take calculated risks and try something new once in a while!

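- The agent calculates a "confidence bonus" for each action that grows the less often the action has been tried and shrinks as it is tried more. Adding this bonus to the action's estimated reward gives rarely explored options a chance of being selected even if they look less promising initially.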

- Python's `math` library comes in handy here for calculating exploration bonuses through logarithmic functions.

- Real-world example: Ever tried a new TV show on streaming platforms after seeing their "recommended because you haven’t tried this genre" section? The UCB agent mirrors this curiosity-induced recommendation strategy.
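
As a rough illustration of the confidence bonus, the sketch below scores each action with the common UCB1 form, value + c * sqrt(ln N / n), where N is the total number of tries and n is the number of tries for that action. The function name `ucb_select` and the default constant `c=1.4` are assumptions; the tutorial's own implementation may differ.

```python
import math


def ucb_select(q_values, counts, c=1.4):
    """Pick an action index using a UCB1-style score (illustrative sketch).

    q_values: estimated reward per action
    counts:   how many times each action has been tried
    c:        exploration strength (assumed default)
    """
    # Untried actions have an undefined bonus, so try each of them once first.
    for action, n in enumerate(counts):
        if n == 0:
            return action
    total = sum(counts)
    scores = [q + c * math.sqrt(math.log(total) / n)
              for q, n in zip(q_values, counts)]
    return scores.index(max(scores))


# A rarely tried action can win despite a slightly lower estimate.
print(ucb_select([1.0, 0.9], [100, 1]))         # exploration bonus favors action 1
print(ucb_select([1.0, 0.9], [100, 1], c=0.0))  # with no bonus, pure greedy picks 0
```

Setting `c` higher makes the agent more curious; setting it to zero reduces the rule to always picking the current favorite.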

Planning for the Future: Monte Carlo Tree Search (MCTS)

- MCTS takes everything to the next level. Instead of pure trial and error, it uses simulations to predict possible future outcomes. It's like a chess player thinking 10 moves ahead before deciding their next action.

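- The agent builds a tree structure where each node represents a state (or position on the grid) and each branch represents an action leading to a new state. The agent explores these and expands the most promising ones.

- After each simulation, the result is propagated back up the tree, a step MCTS calls backpropagation (not to be confused with backpropagation in neural networks). Every node along the visited path updates its visit count and value estimate, which sharpens later decisions.

- The Python implementation follows a structured approach where the agent thinks ahead by simulating scenarios many times under specific constraints, for example a budget on the number of simulations or a limit on how deep each one runs.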

- Relatable example: Remember planning a road trip? You consider alternative routes, weigh pros and cons of each (traffic, gas stations, food stops), and pick a path that seems optimal. That's MCTS for you!
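
The four phases usually associated with MCTS (selection, expansion, simulation, backpropagation) can be sketched on a tiny grid as shown below. Everything here is an assumption made for illustration: the 4x4 layout, the obstacle positions, the reward values, and the parameters `n_sims`, `depth`, `c`, and `gamma`. It is a minimal sketch, not the tutorial's implementation.

```python
import math
import random

SIZE = 4
GOAL = (SIZE - 1, SIZE - 1)
OBSTACLES = {(1, 1), (2, 1)}                 # hypothetical layout for the demo
MOVES = [(-1, 0), (0, 1), (1, 0), (0, -1)]   # up, right, down, left


def step(state, action):
    """Deterministic model of the grid: returns (next_state, reward, done)."""
    r, c = state[0] + MOVES[action][0], state[1] + MOVES[action][1]
    if not (0 <= r < SIZE and 0 <= c < SIZE) or (r, c) in OBSTACLES:
        r, c = state                         # blocked moves leave the agent in place
    done = (r, c) == GOAL
    return (r, c), (10.0 if done else -0.1), done


class Node:
    def __init__(self, state, parent=None, reward=0.0, done=False):
        self.state, self.parent = state, parent
        self.reward, self.done = reward, done   # reward received on entering
        self.children = {}                      # action -> Node
        self.visits, self.value = 0, 0.0        # value = sum of returns


def rollout(state, rng, depth, gamma):
    """Random playout from `state`; returns the discounted sum of rewards."""
    total, discount = 0.0, 1.0
    for _ in range(depth):
        state, reward, done = step(state, rng.randrange(len(MOVES)))
        total += discount * reward
        discount *= gamma
        if done:
            break
    return total


def mcts(root_state, n_sims=300, depth=20, c=1.4, gamma=0.95, seed=0):
    rng = random.Random(seed)
    root = Node(root_state)
    for _ in range(n_sims):
        node = root
        # 1. Selection: descend through fully expanded nodes using a UCB score.
        while not node.done and len(node.children) == len(MOVES):
            node = max(node.children.values(),
                       key=lambda ch: ch.value / ch.visits
                       + c * math.sqrt(math.log(node.visits) / ch.visits))
        # 2. Expansion: add one child for an action not tried yet.
        if not node.done:
            untried = [a for a in range(len(MOVES)) if a not in node.children]
            action = rng.choice(untried)
            nxt, reward, done = step(node.state, action)
            node.children[action] = Node(nxt, node, reward, done)
            node = node.children[action]
        # 3. Simulation: estimate the new node's value with a random rollout.
        ret = 0.0 if node.done else rollout(node.state, rng, depth, gamma)
        # 4. Backpropagation: push the return up the visited path.
        while node is not None:
            ret = node.reward + gamma * ret
            node.visits += 1
            node.value += ret
            node = node.parent
    # Act on the most visited action at the root.
    return max(root.children, key=lambda a: root.children[a].visits)
```

Calling `mcts((3, 2))` plans from the cell just left of the goal. Choosing the most visited root action, rather than the one with the highest average value, is a common choice because visit counts are less sensitive to a few lucky rollouts.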

Visualizing Success: Training and Comparing the Agents

- Once all three agents (Q-Learning, UCB, and MCTS) are ready, they’re trained on the grid world to learn how to overcome obstacles and reach the goal efficiently.

- During training, performance metrics such as rewards over multiple episodes are logged to evaluate how quickly and reliably each agent adapts to the dynamic grid environment.

- Graphs and statistics reveal fascinating insights—MCTS often outsmarts the other two due to its foresight, but Q-Learning and UCB shine in simplicity and adaptability.

- These visualizations not only showcase the reward graph over episodes but also highlight how exploration (trying random paths) balances with exploitation (choosing the best path).

- Bonus tip: The colorful plots make it feel like a race where you track how well each agent performs round after round, rooting for your favorite one!
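
Raw per-episode rewards tend to be noisy, so comparisons like the ones above are often easier to read after smoothing. The helpers below are a small sketch of that idea; the names `moving_average` and `summarize`, the window size, and the `history` dictionary layout are assumptions, and the example values are placeholders rather than results from the tutorial.

```python
import numpy as np


def moving_average(rewards, window=20):
    """Smooth a non-empty sequence of per-episode rewards."""
    rewards = np.asarray(rewards, dtype=float)
    window = min(window, len(rewards))
    kernel = np.ones(window) / window
    # mode="valid" keeps only positions where the full window fits.
    return np.convolve(rewards, kernel, mode="valid")


def summarize(history, last_n=50):
    """history: dict mapping agent name -> list of per-episode total rewards.

    Returns the mean reward over the last `last_n` episodes for each agent.
    """
    return {name: float(np.mean(r[-last_n:])) for name, r in history.items()}


# Placeholder numbers purely to show the call shape, not real training results.
history = {"agent_a": [0.0, 2.0, 4.0], "agent_b": [1.0, 1.0, 1.0]}
print(moving_average(history["agent_a"], window=2))
print(summarize(history, last_n=2))
```

The smoothed arrays can then be passed to any plotting library, one line per agent, to produce the kind of reward-over-episodes chart described above.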

Conclusion

- This tutorial takes you step-by-step through the fascinating world of exploration agents.

- You now understand the grid world design, Q-Learning's steady path to rewards, UCB's strategic balance, and MCTS’s simulation-based brilliance.

- Combining these techniques can pave the way for smarter, faster, and more adaptable decision-making systems — applicable in industries from robotics to online services.

- If you're new to reinforcement learning, this should set a strong foundation for tackling more complex scenarios!